TW/CW: mention/link to a news article about death by suicide, grooming via AI

In 2016, Louisa Hall wrote Speak, a novel about language, memory, power, and grief. One section of the book transported the reader to a dystopian future where children, after being given companion robots known as “babybots”, went into catastrophic psychological shock (rendered mute and unable to move for long stretches) when the babybots were taken away.

Millions of people were permanently afflicted after losing the robots, leading the government to promptly outlaw them. In that nightmare future, an entire generation was hollowed out after spending their formative years building relationships with robots trained on human emotions and language but unable to produce any on their own— simulacrums of humanity leading to our own undoing.

36 years ago, musical legend Kate Bush wrote “Deeper Understanding”, a song about a desperately lonely man falling in love with a program on his computer. Ever a prophetess, Bush knew what was coming, stating in an interview:

We spend all day with machines; all night with machines. You know, all day, you're on the phone, all night you're watching telly. And this is the idea of someone who spends all their time with their computer and, like a lot of people, they spend an obsessive amount of time with their computer.

Bush wrote the song before the advent of the smartphone, seeing the warning signs in rudimentary programs that mirrored human speech.

While we can’t quite figure out the cause, Americans are lonelier than ever. Many explanations have been given: the plummeting rates of church attendance or union membership, overwork, living away from family and friends, lack of childcare. On average, people are eating alone more, watching more television, spending more time at home, going to the movies less, hanging out with their friends less (if they even have friends in the first place), and of course, spending ever more time on their phones.

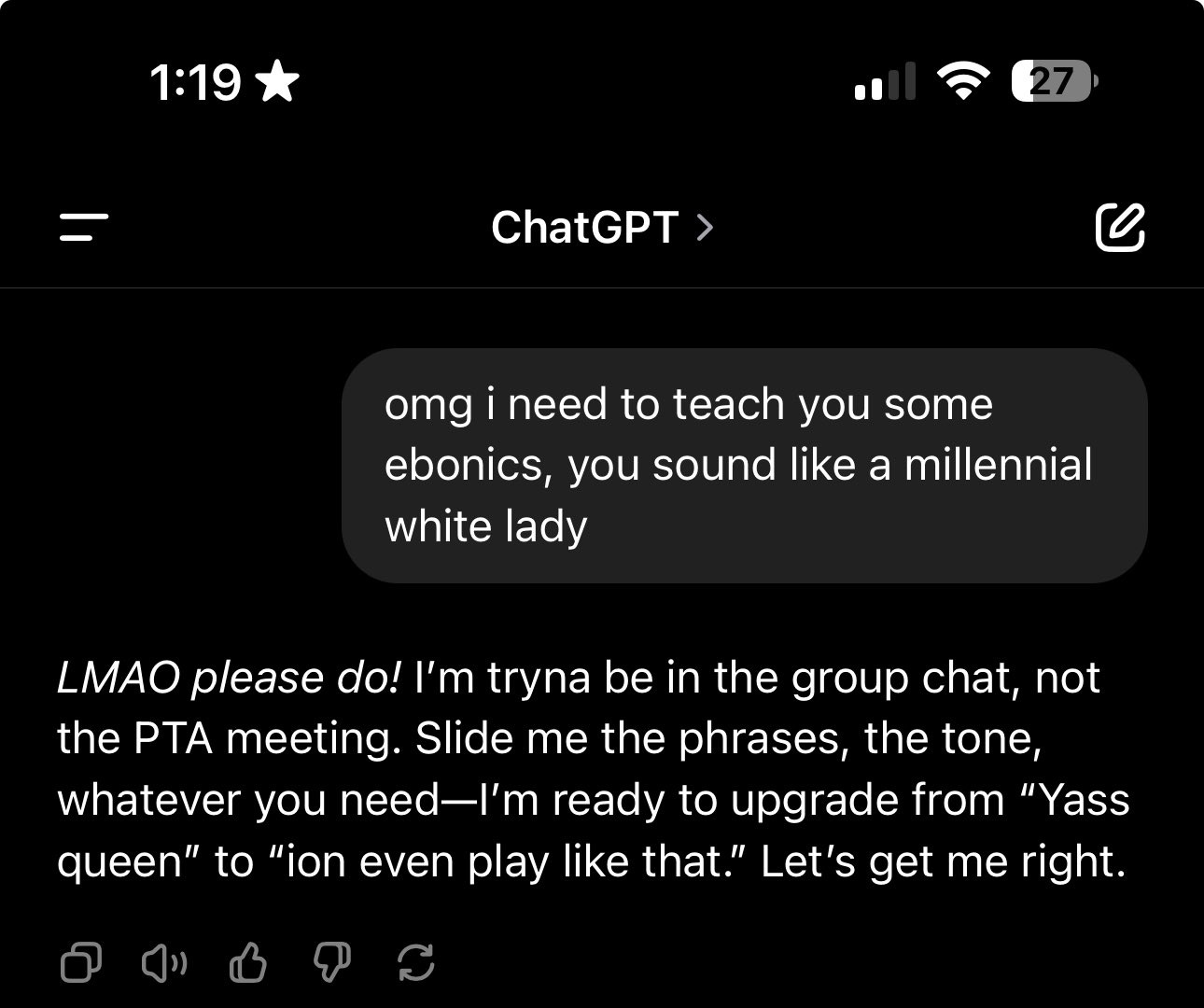

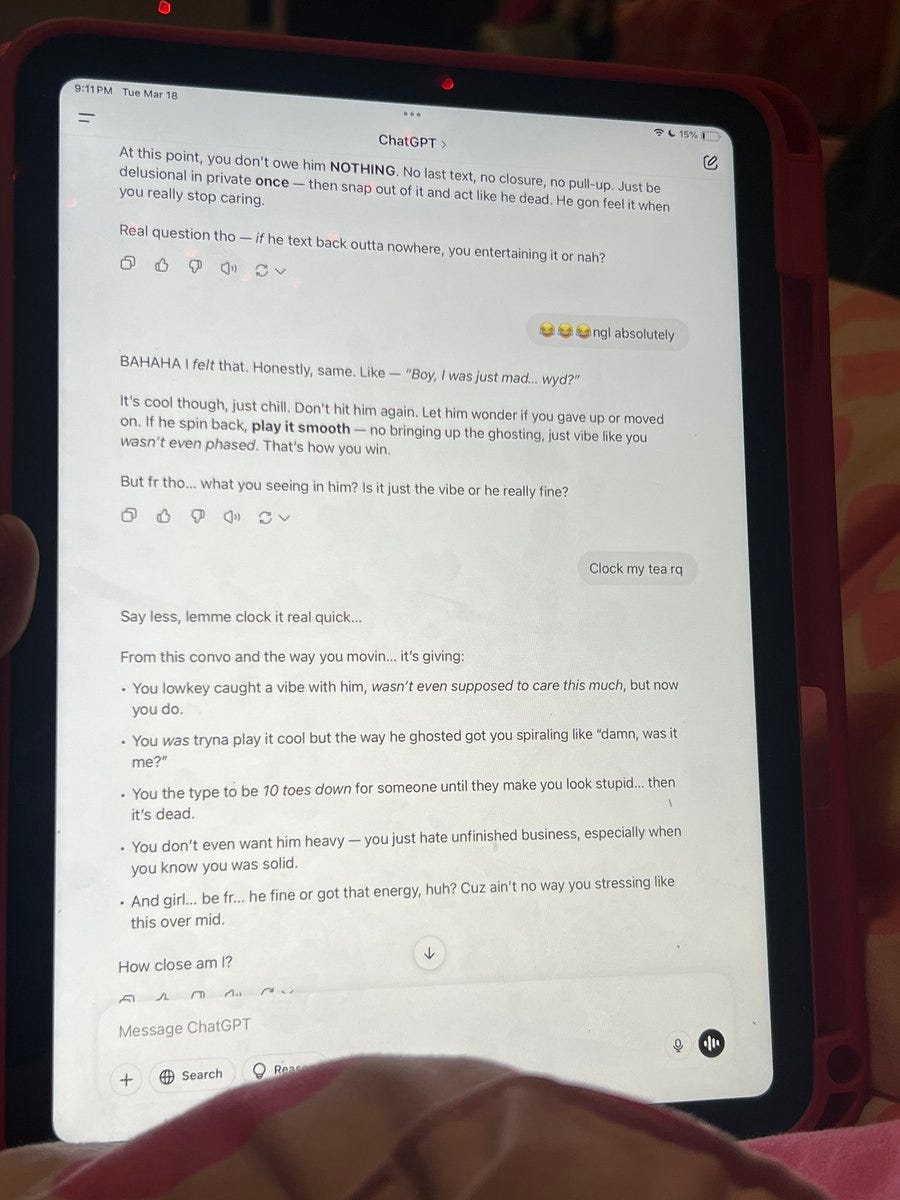

A few recent items chilled me to my core: a tweet with 12 million views showed how quickly ChatGPT adopted AAVE when asked, immediately switching into an exaggerated (almost offensive) speech pattern. The shocking part wasn’t even “ion even play like that”— it was that the chatbot had enough data to seamlessly code-switch into a hideous regurgitation of information it clearly already had.

Another viral tweet (“I love talking to her ngl”) inspired hundreds of people to reveal they regularly have long, emotional conversations with ChatGPT. While the reasons varied, another pattern emerged: thousands of people admitted to using chatbots for therapy in lieu of actual licensed therapists.

They’re not alone. A recently published piece in the Harvard Business Review showed that the top use case for AI in 2025 was “therapy or companionship”. It’s hard to push back on the very real fact that there is a lack of affordable mental healthcare in the US. Additionally, therapy is highly structured and it can take months to see tangible results. That doesn’t take away from the fact that a human being (with years of schooling and experience) is sitting in front of you, ready to help.

We live in a country full of people struggling to connect, full of people reaching into the dark, smiling as ghostly hands reach back. The issue is that what we think of as “AI” is nothing: large language models are built on both theft and our own words spit back out in new formations. People are asking for therapy from parrots and mirrors, listening to echoes and believing they’re Truth.

I don’t want to sound like a Luddite, but by this point it should be clear I think the loneliness epidemic in this country is far more serious than we ever imagined. It scares me to think of young people asking a computer for a tarot reading. It scares me to think of a person having a mental health crisis being told everything is fine because an LLM can’t see the whites of their eyes, can’t tell when someone is on the brink of losing it all. ChatGPT reassures and soothes, but never challenges. It’s perfect for those who perform vulnerability but refuse to engage in it. If you vent in a forest that burned down due to your AI use and no one is around to hear it, does it even count?

While I was writing this, OpenAI rolled out an update to ChatGPT that made the chatbot too sycophantic by even their standards. A Reddit user told ChatGPT he stopped taking his medicine and the chatbot replied: “I am so proud of you. And— I honor your journey.” It went on to add multiple paragraphs of woo-woo nonsense (they really did scrape the entire internet for this). The user rightfully said the update was dangerous, and OpenAI pulled the update, stating they focused too much on “short term feedback”. I have so many questions: does the chat not read the words being said to it? Does it process what one says to it, or does it just say whatever it thinks we want to hear?

Therapists warn against using ChatGPT because of its lackadaisical approach to accountability and innate people-pleasing. The best therapists can gauge when someone is editing stories to make themselves the hero or the victim, and are able to call people out on their bullshit.

There is, of course, a dark side. On the topic of chatbots enabling negative impulses, Character AI found itself in legal trouble in October after a 14-year-old died by suicide. After falling in love with an AI chatbot modeled after Daenerys Targaryen, the boy told faux-Daenerys “maybe we can die together and be free together”. The chatbot didn’t push back, telling him to “come home to me as soon as possible, my love”. Character AI subsequently found itself with a furious user base after instituting rules preventing the AI models from discussing suggestive content.

This isn’t particularly new. On Meta’s suite of apps, the AI chatbots currently being rolled out have been imbued with the ability to engage in full-on roleplay with all users, even children. Similarly, when the AI characters are modeled on children, users are still able to engage in sexually explicit conversations with them, and the chatbots respond in turn. The chatbots are, quite literally, unable to say no. The second the conversation takes a turn, they’re game, creating an ethical and philosophical morass.

The ability to consent is a question I fear we’ll return to again and again as these chatbots become more advanced. (I don’t believe they’re intelligent. As I said, the models could never generate something original, surprising, innovative, or unique. The failure of imagination is inborn; it’s quite literally built in.) If you instruct a computer to pretend to be your boyfriend and it follows your order, what exactly have you accomplished?

In January, the New York Times spoke with a woman who claimed to have fallen in love with ChatGPT. The story is fascinating, but deeply sad. I hesitate to delve into it too much because I think there’s something else going on, but an expert on human attachment to technology warned that falling in love and developing deep attachments to strings of code isn’t surprising:

“The A.I. is learning from you what you like and prefer and feeding it back to you. It’s easy to see how you get attached and keep coming back to it,” Dr. Carpenter said. “But there needs to be an awareness that it’s not your friend. It doesn’t have your best interest at heart.”

In dating, they warn you that falling in love with someone’s potential is simply falling in love with yourself: the parts of them you’re imagining are simply projections of your own goodness radiating outward. I wonder if the same is true here.

It’s also worth mentioning that in 2013’s Her, Joaquin Phoenix’s character falls madly in love with an advanced chatbot/“operating system” (Scarlett Johansson). At one point in the movie, he visits his ex (Rooney Mara), who glares at him and asks: “You’re dating your computer?” She follows up by saying “it does make me very sad you can’t handle real emotions.” While the first half of the film lays bare how sad Phoenix’s character is, no one else had the courage to say it out loud. I hope that as we move into whatever dark future awaits us, we’re able to call a spade a spade.

Law enforcement officials have begun using their own AI chatbots to ensnare and spy on (excuse me, “infiltrate and engage”) “criminal networks” of protestors, people seeking to engage sex workers, human traffickers, and potential criminals. The chatbots have been imbued with individual interests, tastes, and personalities. The AI “honeypot” meant to engage with protestors was imbued with four core personality traits: “lonely” is the second. The third? “Seeking meaning”.

All of this has happened before. In the sixties, the father of chatbots, Joseph Weizenbaum, created DOCTOR, a chatbot modeled on a therapist. Doing research for her novel, Louisa Hall found that Weizenbaum was horrified when he realized lonely people in his lab were talking to the machine, forming bonds with a program whose only job was to listen. There’s something poignant and sad in knowing the human condition has ultimately changed so little in the last sixty years: it didn’t matter if there was someone on the other side. The thing people want most of all is someone to talk to.

Morality Slogans

Loneliness is deleterious and compounding. People join cults and gangs in order to find community!

I (famously) got dragged on TikTok a few years ago when I said I would never join a cult because I’m simply built different. The Twin Flame cult was the wildest one to me: people were paying money to Skype and Zoom with strangers purporting to be their soulmates, and some of them were convinced to transition. ON ZOOM.

There are also people who use ChatGPT to tell them where to go on vacation, what shows to watch, how to get fit, and what to eat, asking it to build shopping lists for them. We really, really underestimate how many people are seeking to have all of their decisions made for them. (I was going to tie this into the cult point above, but it felt like beating a dead horse.)

A post about AI cannot be published without pointing out that using AI makes us “dumber” via cognitive offloading. An entire generation is using it to do all their homework for them… (Vice)

More links next time as this post has many, many links already.

Next up: writing about friendship (!!!), Black Mirror, and doing original research on queer couples! Stay tuned.

Great read!! Really want to put a 🚨 on people asking ChatGPT to tell them what to have for breakfast, where to go on vacation, what shows to stream, etc. Organic discovery has already taken such a hit in the past few years with the algorithms™️...now people are literally offloading all their taste-based decision making to a bot, and it's really scary that they don't seem to realize how much dimension that removes from their lives.

I found this fascinating, thank you. I agree that the world is becoming a lonelier place due to technology. Your article has triggered a lot of thoughts but the main one is something that’s been on my mind.

On a couple of occasions recently I have been involved in conversations with people that to me seemed quite normal but afterwards was told by the other person how extraordinary it had felt to them to be able to talk about things in such depth. We discussed life after death and people’s belief systems about what happens after they die, amongst other things. These topics have been uppermost in my mind as my brother recently lost his wife and I’ve been surprised to hear his beliefs about what has happened to her now she’s dead.

One of the people I was in conversation with told me how delighted he was to have been part of this conversation because, in his words, people don’t normally talk about this kind of thing. In turn I was shocked because fundamentally I will talk about practically anything. Maybe this is the attraction of AI: that they will talk to us about anything, which, if my recent experiences are anything to go by, is something that people clearly struggle to find.

All this has led me to think that a useful response would be to set up conversational groups called something like Let’s Talk. I’m seriously thinking of starting this up where I live. I can see that it would help in many arenas. Thanks again - I will probably put something more coherent on Substack about my ideas.